Why are Scrolling Messages Always in Italics?

26 Mar 2014, 17:45 UTC

I was on a train a while ago, bored, and I noticed (for the Nth time) that the characters on the scrolling display looked slanted. I found myself pondering why that would be - especially since it's impossible for them to be slanted! LED displays are laid out on a rectangular grid, and each character is only a few LEDs wide - you couldn't do italics on that display if you wanted to!

I knew that LED displays are scanned. Only one row of LEDs is actually lit at one time, but by repeatedly scanning top-to-bottom, persistence of vision makes us see a solid image where it appears all the rows are switched on at once. So maybe this scanning effect somehow causes the italics to appear.

After a little thought, I came up with the following hypothesis: let us assume that the brain "wants" to see a solid image, i.e. it "wants" to interpret the LEDs (which are in fact flashing very fast) as a single coherent non-flashing object. This might be similar to a situation where an early human is trying to see a predator animal moving in a jungle environment, while both are running. The predator would disappear behind trees and vines, then reappear, then disappear, all at a fairly high frequency. The brain needs to reconstruct the predator from this information.

Since the letters are scrolling, the virtual object is obviously moving, and we assume the brain can detect that.

So say we had a real moving object with LEDs attached to it which were flashing in the same row-based sequence. Which object corresponds to the flashes of light which the display in fact outputs?

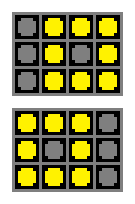

Let's examine the scanout for a simple 4x3 LED matrix. A square is scrolling right-to-left. The flipbook looks like this:

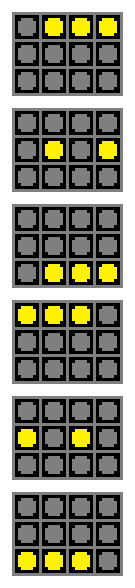

But the display actually changes three times faster than this, and only one scanline is lit on any time step. So the actual timeline looks more like this:

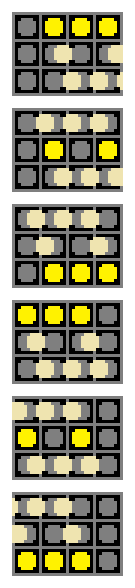

Now, since the 2nd scanline (for example) is lit later than the first scanline, the virtual object has moved a little left in that time. So although the 2nd scanline is directly under the 1st scanline on the display, the part of the object now at that position is a little to the right of the part of the object displayed on the 1st scanline. If we follow this logic through we recreate the following smoothly-moving object which gives the correct outputs on the LEDs:

Hence the impossible italics! At most times, most points on the object are not even lined up with an LED!

Consequences

The consequences of this hypothesis are actually quite staggering.

Firstly, the brain (meaning everything beyond the light sensors in the retina) is capable of using signal timing which is much faster than the basic "frame rate" given by persistence of vision. It's not clear from this one datum exactly what the limiting rate is, though.

Here's another train experiment: look out of the window when the train is moving. If you keep your eyes fixed you see just a blur, but it's easy to latch onto some object and track it with your eyes. At that point, the object becomes clear and in-focus. It seems unlikely the eyes can track with this much accuracy, so the presumption is that some image processing is also involved - and this is applied to very fast signals indeed.

So perhaps the brain uses a multi-rate processing paradigm, where the low-level motion detection works on essentially analog (in time) signals, and then higher-levels work on scene scale and at that scale the averaging-out is performed which smooths out things like flashing LEDs, and gives persistence of vision.

Secondly, the way old-fashioned TV worked was "correct". The camera scanned the scene and the display scanned the image and both were synchronized. Thus, for fast-moving images, the brain would have been capable of reconstructing the moving objects. In some sense, then, we have gone backwards, since TVs are no longer synchronized to cameras, and can use arbitrary scanouts (including e.g. 100Hz displays on 50Hz source material). It might be worth experimenting with old-fashioned TVs fed with digital signals, to try to create improved moving images by applying the correct shear according to the above theory. In fact, there is a paper on this exact topic, called Just In Time Pixels.

To make a suitably "correct" moving image on a given screen, we would need to know the exact scanout pattern of that screen. Perhaps one day HDMI could be upgraded to supply this information to the application - for now, the importance of these details is not appreciated.

Thirdly, this result may have important ramifications for visual processing in the VR environment (e.g. Oculus Rift). Nausea may be reduced, and visual fideltiy increased, by using the Just In Time Pixels idea - although that places very heavy constraints on the rendering system.

That's all for now - please comment if you have any alternative explanations for the italics, or any more consequences of this result occur to you. Also, please comment if you've seen the italics, and know what I'm talking about - not everyone does, which means either my brain works differently, or other people are just less observant :p